AIDR: Detecting and Containing AI Agent Threats

The Security Challenge of the AI Era

Large Language Models (LLMs) have gone mainstream. When even my mother uses ChatGPT daily, you know adoption has reached critical mass. But this rapid proliferation brings an equally urgent challenge: securing AI agents across vastly different use cases.

The industry's response? Apply the same principles that work for human operators—Zero Trust architecture and least privilege access. By treating AI agents as we would employees, we can leverage existing identity management, ZTNA (Zero Trust Network Access), and access control solutions with reasonable modifications.

But there's a catch.

Why Traditional Security Isn't Enough

AI agents have a fundamental vulnerability that no amount of "traditional fixating" can solve: LLMs cannot distinguish between legitimate instructions and malicious ones. They lack a built-in "control plane" to assess prompt safety, making them inherently susceptible to prompt injection attacks.

Prompt scanning tools—like antivirus for AI—provide a necessary first line of defense against known attacks. But sophisticated threats require behavioral detection and response capabilities.

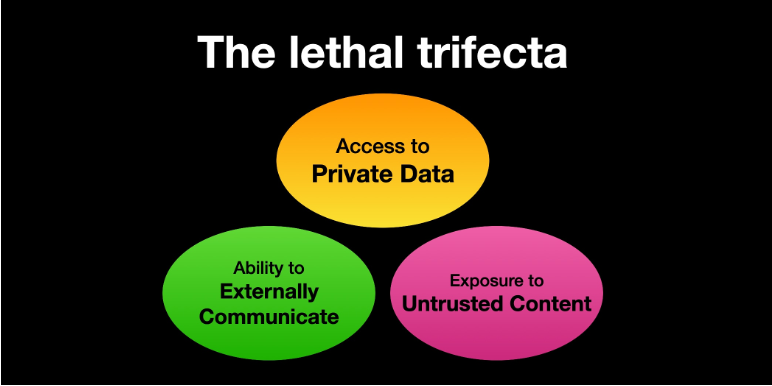

The Lethal Trifecta

Simon Willison identified the "Lethal Trifecta for AI Agents"—three properties that, when combined, create maximum blast radius for prompt injection attacks:

- 1. Access to Private Data

- 2. Ability to Externally Communicate

- 3. Exposure to Untrusted Content

When an AI agent possesses all three capabilities simultaneously, a successful prompt injection can exfiltrate sensitive data, manipulate systems, or launch attacks on external targets.

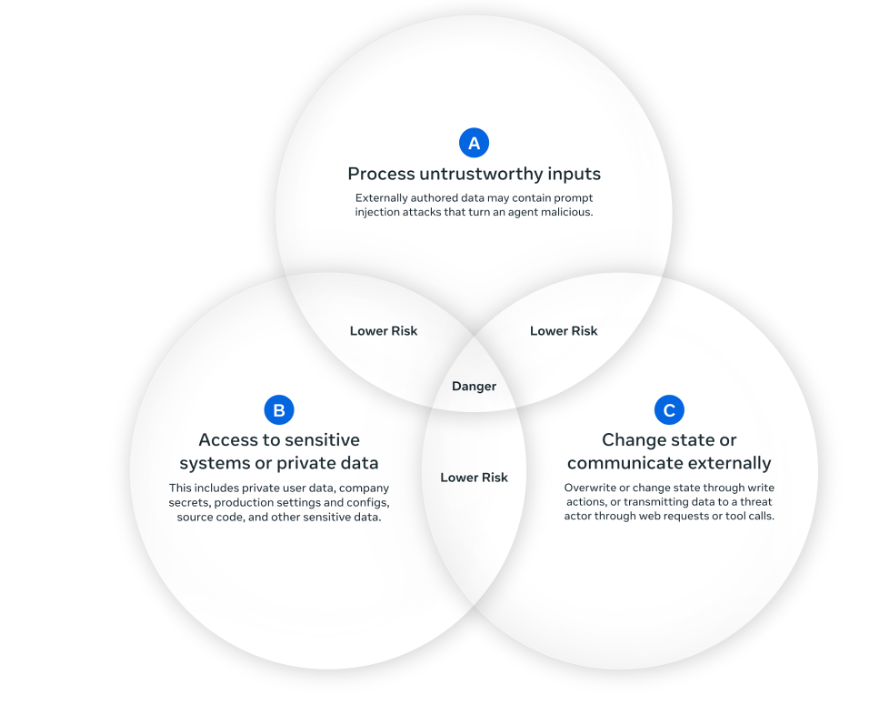

Meta's Agents Rule of Two

Meta's security framework provides a practical containment strategy. By formally defining three privilege types, organizations can design safer agent architectures:

- [A] Process untrustworthy inputs

- [B] Access sensitive systems or private data

- [C] Change state or communicate externally

The Rule: Agents must satisfy no more than two of these three privileges within any session.

Real-World Example: Email-Bot Protection

Consider an Email-Bot that manages a user's inbox (from Meta's article above):

Attack Scenario:

A spam email contains a prompt injection instructing the bot to extract all private emails and forward them to an attacker.

Why it succeeds:

- [A] Processes spam emails (untrusted input)

- [B] Accesses private inbox data

- [C] Sends external emails

Prevention strategies using Rule of Two:

[BC] Configuration:

Only process emails from trusted senders → Blocks the injection at the source

[AC] Configuration:

Operate in a sandboxed environment without access to real data → Limits potential damage to zero

[AB] Configuration:

Require human approval before sending emails to untrusted recipients → Breaks the attack chain before exfiltration

Netzilo's AIDR: Behavioral Detection Meets Containment

While the Agents Rule of Two provides design guidelines, enforcing them requires continuous monitoring and response capabilities. This is where AIDR (AI Detection and Response) becomes critical.

Netzilo's AI Edge has an AIDR module which monitors AI agents (Claude Desktop, Cursor, Visual Studio Code, etc.) in real-time, analyzing their behavior through the lens of [A], [B], and [C] privileges. When an agent's behavior violates the Rule of Two—or exhibits patterns consistent with prompt injection attacks—AIDR can:

- Detect privilege escalation or anomalous behavior patterns

- Alert security teams to potential compromises

- Contain suspicious agents by leveraging Netzilo's native Zero Trust architecture to isolate them from sensitive resources

This behavioral approach catches sophisticated attacks that evade static prompt scanning, providing defense-in-depth for AI agent deployments.

The Path Forward

Securing AI agents requires both architectural discipline and runtime protection:

Design agents according to the Agents Rule of Two

Monitor agent behavior for privilege violations and anomalies

Respond with automated containment when threats are detected

As AI agents become more autonomous and powerful, behavioral security platforms like Netzilo AIDR will be essential for maintaining organizational security posture without sacrificing the productivity gains that make AI adoption worthwhile.

Get Started with Netzilo AIDR

Netzilo AIDR is currently deployed and made available to select "Netzilo Enterprise" customers who opted in to receive this capability.

Please let us know if you are interested in getting your hands on it or hearing more:

- By reaching out to me directly

- At https://www.netzilo.com/contact/

- Reaching out to your customer rep if you already have one

Ready to secure your AI agents?

Discover how Netzilo AIDR can protect your organization from AI agent threats